Wednesday, November 30, 2011

Computer Controlling a Syma Helicopter

Recently, I've been playing with these inexpensive Syma Remote Control Helicopters. At the time they were only $20 (but seem to have been price adjusted for the holidays). They're quite robust to crashes and pretty easy to fly. For $20, they're a blast. The other interesting thing about these copters is that the controller transmits commands using simple infrared LEDs rather than a proper radio. This simplicity makes it tauntingly appealing to try reverse engineering. So tonight, I decided to do a little procrastineering and see if I could get my helicopter to become computer controlled.

For hardware, I've been liking these Teensy USB boards because they are cheap, small, versatile, and have a push-button boot loader that makes iteration very quick. They can be easily configured to appear as a USB serial port and respond to commands. For the IR protocol, I started with this web page, which got the helicopter responding. But the behavior I was getting was very stuttery and would not be sufficient for reliable autonomous control. So, I decided to take a closer look with an oscilloscope to get accurate timing from the stock remote control. Some of my measured numbers were fairly different from the web tutorial I found. But now the control is fairly solid. So, here is the nitty gritty:

IR Protocol:

- IR signal is modulated at 38 kHz.

- Packet header is 2ms on then 2ms off

- Packet payload is 4 bytes in big-endian order:

1. yaw (0-127) default 63

2. pitch (0-127) default 63

3. throttle (0-127 for channel A, 128-255 for channel B) default 0

4. yaw correction (0-127) default 63

- Packet ends with a stop '1' bit

Format of a '1' is 320us on then 680us off (1000us total)

Format of a '0' is 320us on then 280us off (600us total)

Packets are sent from the stock controller every 120ms. You can try to send commands faster, but the helicopter may start to stutter as it misses messages.
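To make the timing above concrete, here is a minimal sketch in Python (not the Teensy code from the download) that turns the four control values into a list of on/off durations. The function names are my own for illustration, and the bit order within each byte (most-significant bit first) is an assumption, so check it against your own scope traces.

```python
# Timings from the protocol notes above, in microseconds.
HEADER_ON_US = 2000
HEADER_OFF_US = 2000
MARK_US = 320          # every bit starts with 320us of modulated IR
ONE_SPACE_US = 680     # '1' = 320us on + 680us off
ZERO_SPACE_US = 280    # '0' = 320us on + 280us off


def encode_packet(yaw, pitch, throttle, yaw_correction, channel_b=False):
    """Return one IR packet as a list of (on_us, off_us) pairs."""
    for value in (yaw, pitch, throttle, yaw_correction):
        if not 0 <= value <= 127:
            raise ValueError("each control value must be in 0-127")
    # Channel B uses the 128-255 range of the throttle byte.
    throttle_byte = throttle + 128 if channel_b else throttle
    pulses = [(HEADER_ON_US, HEADER_OFF_US)]
    for byte in (yaw, pitch, throttle_byte, yaw_correction):
        # Assumption: bits are sent most-significant bit first.
        for bit_index in range(7, -1, -1):
            bit = (byte >> bit_index) & 1
            pulses.append((MARK_US, ONE_SPACE_US if bit else ZERO_SPACE_US))
    # The packet ends with a stop '1' bit.
    pulses.append((MARK_US, ONE_SPACE_US))
    return pulses


def packet_duration_us(pulses):
    """Total air time of a packet in microseconds."""
    return sum(on_us + off_us for on_us, off_us in pulses)
```

With the default values (63, 63, 0, 63) this works out to 34 on/off pairs and 31,400us of air time, which leaves plenty of idle time inside the 120ms packet interval. During each "on" period the LED still has to be toggled at the 38 kHz carrier; that part is left to the microcontroller.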

Download Teensy AVR Code (updated 11/30/2011)

The code is available at the above link. It's expecting 5 byte packets over the serial port at 9600 baud. The first byte of each packet must be 255, followed by yaw, pitch, throttle, and yaw correction (each ranging from 0-127). It will return a 'k' if 5 bytes are properly read. If it doesn't receive any serial data for 300ms, it will stop transmitting the IR signal.
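As a rough illustration of the serial side, this Python sketch builds the 5-byte packet described above. The function name is hypothetical, and the commented-out usage assumes the pyserial package and a made-up port name; adjust both for your system.

```python
SYNC_BYTE = 255  # first byte of every packet


def build_serial_packet(yaw=63, pitch=63, throttle=0, yaw_correction=63):
    """Return the 5 bytes to write to the Teensy for one command."""
    values = (yaw, pitch, throttle, yaw_correction)
    for value in values:
        if not 0 <= value <= 127:
            raise ValueError("each control value must be in 0-127")
    return bytes((SYNC_BYTE,) + values)


# Hypothetical usage with pyserial (port name is an assumption):
#
#   import serial, time
#   port = serial.Serial("/dev/ttyACM0", 9600, timeout=0.5)
#   while True:
#       port.write(build_serial_packet(throttle=40))
#       ack = port.read(1)        # should be b"k" if all 5 bytes were read
#       time.sleep(0.1)           # keep sending; the IR stops after 300ms of silence
```

Because 255 is outside the 0-127 range of the control values, it can only ever appear as the first byte, which is what lets the receiver find the start of a packet.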

Unfortunately, I can't help you write a program to communicate over serial since that will depend on your OS (Windows, Mac, Linux) and varies by language as well. But, it is fairly easy with lots of web tutorials. The harder challenge will be figuring out how to update the 3 analog values to keep it from crashing. =o) The most likely candidate is to use a camera (probably with IR markers) to monitor the position of the helicopter. But, getting that to work well is definitely a project unto itself.
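If you do get a position estimate from a camera, the simplest starting point is probably a proportional controller on one axis. The sketch below is only that: the hover throttle, the gain, and the height units are invented placeholder numbers, not measured values, and a real controller would almost certainly need more than this.

```python
def clamp(value, low=0, high=127):
    """Round to an integer and keep it inside the valid command range."""
    return max(low, min(high, int(round(value))))


def throttle_command(target_height, measured_height,
                     hover_throttle=60, gain=40.0):
    """Proportional control: push the throttle up when too low, down when too high.

    hover_throttle and gain are hypothetical values that would need tuning.
    """
    error = target_height - measured_height
    return clamp(hover_throttle + gain * error)
```

The same pattern could be applied to yaw and pitch around their neutral value of 63.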

Good Luck!

Posted by Johnny Chung Lee at 2:57 AM (5 comments)

Tuesday, November 22, 2011

Shredder Challenge - Puzzle 2 done! Onto Puzzle 3

Puzzle 2 is now done! Puzzle 3 is a drawing (not text).

As we get to more complicated puzzles, it's clear that loading, rendering, and UI limitations will become a bigger and bigger issue. My colleague, Dan Maynes-Aminzade ("monzy" for short) is doing his best to figure out ways to handle that. There are a lot of not-ideal solutions.

It's also clear that more computer aided matching will be necessary to maintain progress. Here are zip files for the pieces of problem 4 and problem 5, if you want to try your hand at analyzing them directly.

Puzzle 4 pieces

Puzzle 5 pieces

If you come up with good ideas that work, post them in the comments.

Posted by Johnny Chung Lee at 3:45 PM (5 comments)

Puzzle 1 done overnight!

Puzzle 1 was completed overnight! Very cool. They definitely get harder. But, the crowd helped UCSD complete puzzles 2 and 3 within a couple of days. So, we could easily catch up.

On to puzzle 2

Posted by Johnny Chung Lee at 7:48 AM (0 comments)

Monday, November 21, 2011

DARPA Shredder Challenge - you could win $50,000

On October 27th, DARPA announced their Shredder Challenge. Try to unshred 5 documents for $50,000. There have been a few notable efforts, such as UCSD's web-based approach. But that page has recently been compromised by malicious users.

With the help of a few colleagues, we had also created a web-based version very early on. But, it was missing some of the login engineering and UI of the UCSD effort. So, we never made it public. But rather than attempt to build a full on competitor, we've decided to open up the tool we built for anyone to try to win the contest themselves!

http://www.unshred.me/1

You can create a private branch of each puzzle if you want to try to give it a go alone, or you can contribute to the main public copy of the puzzle. If our image analysis tinkering goes well, we may add some tools to help make finding matches easier.

NOTE: The deadline for submitting answers to DARPA is December 4, 2011 (11:59PM EST)! Only 13 days left.

GO!

Posted by Johnny Chung Lee at 5:23 PM (5 comments)

Tuesday, November 15, 2011

Technology as a story

Generally, I consider myself a technologist. I work in technology, and I choose environments that have people who are excellent at it. New technologies make the world move forward. If it is shared broadly enough, it is impossible to "un-invent" a technology and thus the world has been irrevocably changed, even if just by a little bit.

However, what saddens me is when I encounter technologists who have the brilliance to create new and wonderful things, but lack a sense of what is beautiful to people. Technology is most often known for being ugly and unpleasant to use, because technologists most often build technology for other technologists.

But to touch millions of people, you have to tell a story - a story that they can believe in, a story that can inspire them. Technology is a tool by which new stories can be crafted. It is not the end product unto itself. All too often, I find engineers and researchers who are eager to build the technology without understanding the story around it: why people should care, and why what they built can be inspiring rather than just enabling.

It is not a skill you learn at school. I have encountered people who understand this, and others who don't. I can't say that I have mastered this ability. However, I can at least respect how powerful it can be and strive to be better at it.

As an engineer, as a technologist, as a researcher, or inventor... I encourage you to understand the power of stories. A story isn't merely the sequence of events in a book or film. It can be a story about you, and how your life or the lives of the people around you could be a bit different... or how the world could be different than it is today.

It is inspiring to see what a talented artist can do with the very simplest of tools. I recently came across the following video which I feel exemplifies this idea.

When you build something or design something, take a moment to imagine the stories that can be told around what you create, and share those stories with others.

Posted by Johnny Chung Lee at 8:36 AM (2 comments)

Tuesday, November 1, 2011

The Kinect Effect

This is, hands down, the best Microsoft commercial I have ever seen. It has soul. It has spirit. It has open optimism about what a company and creative enthusiasts can do together. They are even showcasing Kinect projects on the official website, Kinect.com.

Bravo.

My hat's off to the tens of thousands of creative developers who have explored the wide-ranging uses of Kinect and, of course, to Microsoft & Xbox for seeing that it is a very positive thing to embrace. Yes, Kinect is a product that ultimately must make money through games and applications. But it can also have a remarkably positive impact on our culture.

Best $3000 I ever spent.

Posted by Johnny Chung Lee at 2:14 AM (10 comments)

Friday, August 12, 2011

Giving Computers a Human-Scale Understanding of Space

Computer vision and Human-Computer Interaction are just about to hit their stride. Within the past 4 years, the real-time/robotics computer vision research community has made leaps and bounds - much of it out of the Active Vision Group at Oxford and the Robot Vision Group at Imperial College London. One of the first pieces of work that really started to impress was PTAM (Parallel Tracking and Mapping) by Georg Klein:

Full-up markerless augmented reality had been a long-time dream of many. But few people actually knew how to do it. PTAM was the first system that showed promise that it could handle the rough conditions of real-time motion of a handheld camera.

Also from Oxford, Gabe Sibley and Christopher Mei started demonstrating RSLAM (relative simultaneous localization and mapping), which provides fairly robust real-time tracking over large spaces. The following video uses a head-mounted stereo camera rig:

Just in the past couple of weeks, some new projects done with the help of Richard Newcombe show what happens when you combine this tracking ability with either a depth camera like Kinect, or try to do traditional reconstruction from the RGB. These projects are called KinectFusion (a Microsoft Research Cambridge project) and DTAM (Dense Tracking and Mapping) respectively.

The following video uses a normal RGB camera (not a Kinect camera):

It's important to remember that no additional external tracking system is used, only the information coming from the camera. Also, it's worth pointing out that the 6DOF position of the camera is recovered precisely. So, what you can do with this data reaches well beyond AR games. It gives computers a human-scale understanding of space.

This is pretty exciting stuff. It'll take a little while before these algorithms become robust enough to graduate from a lab demo to a major commercial product. I usually like to say that "people will beat the crap out of whatever you make, and quickly gravitate to the failure cases". But as this work evolves and people begin to build useful applications/software on top, it'll be an exciting next few years.

Posted by Johnny Chung Lee at 12:06 PM (4 comments)

Tuesday, August 9, 2011

UIST 2011 Student Innovation Contest

UIST 2011 is just a couple months away, and Microsoft Hardware is generously providing the toys again this year. This time it's a touch mouse that provides a full capacitive touch image (which is fairly unique). If you are a student, try to enter, win some prizes and get to meet a bunch of other people interested in interface technology.

Official Contest Page

Posted by Johnny Chung Lee at 2:03 PM (0 comments)

Thursday, July 28, 2011

Myth of the Dying Mouse

It's definitely not the most polished delivery I've made (ignite talks don't let you control your slides, which is very unsettling for me). But, here's a 5 minute ignite talk I recently gave entitled "The mouse and keyboard are NOT going away, and there's NO SUCH THING as convergence".

Posted by Johnny Chung Lee at 3:06 AM (2 comments)

Friday, May 6, 2011

Kinect Projects - The First 5 Months

Since its release in November of 2010, there have been thousands of projects that use the Kinect camera from independent developers, artists, and researchers. This is just a short montage of a few that I have enjoyed seeing.

Posted by Johnny Chung Lee at 1:17 PM (8 comments)

Tuesday, May 3, 2011

Thursday, April 14, 2011

Why your arms don't suck.

Oooooooo..... me likey:

It's rare I see a product demo video and say, "Man, I wish my life would be longer so I can see the amazing future we will have." While I am fairly certain the yet un-purchasable robot above will be the cost of a small house, it is hard to contain my techno-lust. Having worked with a 6DOF robot before, I know they can be deceptively hard to program, and without running a manufacturing line, the immediate utility of owning such a device is debatable. However, if these do end up being adopted by some manufacturers, it does potentially reduce both the time to design/produce and the cost to manufacture consumer products. While this means the already blinding rate at which new products are released will continue to accelerate, it also means that the bar for producing mass manufactured devices will come down. As companies adopt re-programmable manufacturing/assembly tools, creating a new product may eventually be a matter of loading new files into all the machines on the floor. I think that's an exciting future and perhaps one day the "Print" button on your computer may take on a much more powerful meaning.

A small educational comment about the arms of this robot. They appear to be 7 degree-of-freedom arms... which is actually the same number of degrees of freedom that your arms have. If you grab a pole, or place your hand on the wall... without moving your shoulder (or your hand), you still have some freedom over the position of your elbow. But why do we need 7 when objects in the world only have 6 degrees of freedom (x,y,z, yaw, pitch, and roll)? The 1 extra degree of freedom is what allows us to reach around obstacles. If we only had 6 degrees of freedom, there would be only 1 way to reach out to pick up an object. So any obstruction along that path would prevent us from getting our food or some tool we needed to survive. Arms that contain 7 degrees-of-freedom have a dramatically larger operating range increasing their utility in uncooperative environments like the real world. For some reason, I find it quite satisfying that there is a mathematical basis for the evolution of our arms.
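The extra degree of freedom is easier to see in a flat, 2D analogy. In the sketch below (my own toy example, not anything from the robot in the video), a planar arm with three equal-length links reaches for a point that only needs two numbers (x, y) to describe. That leaves one spare degree of freedom, so we can pick the first joint angle freely and still solve for the other two. The link lengths and function names are assumptions for illustration.

```python
import math

LINKS = (1.0, 1.0, 1.0)  # hypothetical link lengths


def solve_remaining_links(target, theta1):
    """Fix the first link at theta1, then solve the last two links.

    Returns the absolute angle of each link, or None if the target
    cannot be reached with this choice of theta1.
    """
    l1, l2, l3 = LINKS
    x1, y1 = l1 * math.cos(theta1), l1 * math.sin(theta1)
    dx, dy = target[0] - x1, target[1] - y1
    cos_q = (dx * dx + dy * dy - l2 * l2 - l3 * l3) / (2 * l2 * l3)
    if abs(cos_q) > 1.0:
        return None  # too far (or too close) for the last two links
    q = math.acos(cos_q)  # picks one of the two elbow branches
    a2 = math.atan2(dy, dx) - math.atan2(l3 * math.sin(q), l2 + l3 * math.cos(q))
    return (theta1, a2, a2 + q)


def fingertip(angles):
    """Forward kinematics: where the end of the arm lands."""
    x = sum(l * math.cos(a) for l, a in zip(LINKS, angles))
    y = sum(l * math.sin(a) for l, a in zip(LINKS, angles))
    return x, y


def reachable_postures(target, steps=36):
    """Sweep the free joint and collect every posture that reaches the target."""
    postures = []
    for i in range(steps):
        angles = solve_remaining_links(target, 2 * math.pi * i / steps)
        if angles is not None:
            postures.append(angles)
    return postures
```

Calling `reachable_postures((1.5, 0.5))` returns many different postures that all put the fingertip on the same point. If one of them runs through an obstacle, another may not. A 7-DOF arm reaching for a 6-DOF pose has the same kind of slack, which is the elbow freedom described above.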

Posted by Johnny Chung Lee at 10:40 PM (5 comments)

Thursday, March 17, 2011

The re-emergence of DIY vs Big Organizations

Wow! Limor (of Adafruit Industries) is on the cover of Wired. Wicked. Congrats, I am filled with envy. =o) Even if she does look a little bit Photoshop'd. =o/ But, nice homage!


I have actually put quite a bit of thought toward this topic having recently jumped back and forth between the DIY hobby culture, serious academic research, and massively funded commercial product development. I've had the fortune to observe people trying to make new and interesting things at extremely different scales...from $100 budgets to $100,000,000 budgets.

One thing that I find very consistent: good ideas come from anywhere. The biggest factor in predicting where good work will come from is "how much does this person actually care about what they are working on?" In fact, big budgets and a sense of entitlement can actually hinder the emergence of interesting ideas. Having the *expectation* to do really great work can lead people or organizations to develop tunnel vision on "big" ideas, and miss out on smaller ideas that end up having a lot of impact or dismiss seemingly silly approaches that actually end up working.

There's a really great TEDx talk by Simon Sinek that touches on this. He actually brings up a number of great points in his talk, but the one I want to highlight here is his anecdote about Samuel Pierpont Langley vs. the Wright Brothers in pursuit of powered flight. Langley represented the exceptionally well funded professional research organization, and the Wright Brothers were the scrappy passionate pair of DIY'ers. Today, we now know the Wright brothers as the ones who created the first airplane and most have never heard of Langley. Big investment is not a very strong predictor of valuable output. But, an individual's willingness to continue working on the same problem with very little to no pay... is a good predictor.

The great thing about the hacker community is that, generally, most of them fall into the latter category. Independent developers and hobbyists care enough to spend their own money to work on the projects they believe in. As a result, I'm finding that the delta in the quality of ideas between a well funded research group and the independent community (in aggregate) is getting smaller and smaller by the month. Increasingly, the best "hobby projects" surpass the quality level of "true research" work in the same area. This startling lack of contrast (or sometimes inversion) becomes laughably evident when I am reviewing academic/scientific work submitted for publication on a project that uses Kinect, and then the newest Kinect hack pops up on Engadget that simply beats it hands down.

Now, I could simply make a curmudgeonly claim that the quality of professional/research/academic work has gone down. But I actually don't think that's true. In my opinion, what is happening is that the quality of independent projects is getting better.... fast. Which, I think, resonates with this observation of a "DIY Revolution".

But why is this re-emergence happening now? Wasn't it just a few years ago that people were lamenting how "black boxed" consumer products had gotten, and that the good old days where you could open up a product and futz with the innards in a meaningful way were gone? What's changed to cause this apparent re-birth?

I have a theory.

My Theory about the Re-Emergence of the DIY community:

In the 90's and early 2000's, Moore's law was absolute king. The primary deciding factor in purchasing an electronic product was simply how fast it was. This meant an intense focus on tighter and tighter integration of components, and all the functionality was disappearing into tiny little black chips that could not be accessed nor modified by mere mortals. But now, people barely talk about raw "megahertz" or "megabytes" anymore. General purpose computers have gotten "fast enough". We now want specialized kinds of computers: one that fits in our pocket, one that plays games in 3D, one shaped like a tablet, one that goes in our car, one that can go under water, or get strapped to your snowboard and not break. We have reached a surplus in computing power that makes it affordable to build (and buy) devices for smaller and smaller needs. Our imagination for what to do with computing has simply not kept up with Moore's Law. So, we find more uses for more modest amounts of computing power. But what does this have to do with the DIY community?

A byproduct of having such an immense surplus in computing is that the tools you can buy within a hobbyist budget have also gotten exponentially better in just the past 3-4 years, while the improvement in professional tools has been more modest. The difference in capability between the electronics workbench of a professional engineer and a hobby engineer is getting really, really small. Kinect is an overwhelming example of this. The cost of a high quality depth camera dropped nearly 2 orders of magnitude overnight. As a result, hobbyists are outpacing many professionals in the same domain simply due to sheer parallelism. Perhaps not as dramatically, but this is happening with nearly all genres of electronic and scientific equipment. One day, maybe we'll see backyard DIY electron beam drilling for nano-machining.

When it is no longer about who has the most resources, it's about who has the best ideas. Then, it becomes a pure numbers game:

Take 10,000 professional engineers vs. 1 million hobbyists with roughly equivalent tools. Which group will make progress faster? Now, consider that you have to pay the 10,000 engineers $100K/year to motivate them to work, and the 1 million hobbyists are working for the love of it. Does that change your answer? Even if it doesn't, you have to concede that there does exist a ratio which will make the output of these two groups equal. It's merely a matter of time.

If you follow me through this argument, which I won't claim to be bulletproof but which explains the trends we are observing quite nicely, then this has an interesting implication for organizations that are currently funding big research groups. When it's simply a matter of who has the best ideas, it's tough to try to employ enough people to get good coverage. You could try to spend a lot of energy on trying to find the "best" people, but that's about as challenging as predicting the stock market. Some inventors simply go "dry" of good ideas and end up not providing a good lifetime return on investment (I fully expect this to happen to me someday. I just hope it happens later rather than sooner.)

So to me, this suggests 3 options for big exploratory organizations:

1. Start tackling more resource intensive problems - things that fundamentally cannot be done today for a few thousand dollars, but that at some basic level require materials, tools, energy, computation, space, or manpower that is impossible to obtain at a hobby level. The LHC and space programs are good examples of this. Even if the end goal may be of debatable near term economic value, there is a high probability that unexpected derivative technologies/projects will bring commercial/educational benefits elsewhere.

2. Empower everyone within your organization to do exploratory work. The tools are cheap and "research groups" have no monopoly on good ideas. It's hard to know where lightning will strike, so make sure you encourage it anywhere and hope you haven't missed a spot.

3. Partner with the outside developer community. There is plenty of precedent where using the resources you have to channel the creative power of the masses through the platforms you control can bring a tremendous amount of value if done in an organized manner. It is the rocket fuel that powers companies like Facebook, Twitter, and Groupon to go from non-existence to dominating entities in less than 3 years. The same can absolutely happen with traditional physical electronics and other consumer goods. It simply requires treating your customers as potential partners, rather than assuming they are all potential predators.

Posted by Johnny Chung Lee at 4:56 AM (21 comments)

Saturday, February 26, 2011

Display and Interaction work at Microsoft Applied Sciences

As it becomes increasingly cost effective to manufacture a more diverse set of computing form factors, exploring new ways of providing input to a computer and sending output to a user will become an essential part of developing new genres of computing products. The time when raw computing horsepower was the key differentiator passed us several years ago, and the rate of device specialization has shot up dramatically. Less computing power is fine, if it is where you need it, when you need it, in the form you need it. As a result, you will likely see the speed at which wild interface technology research moves into product also accelerate.

Consequently, if you are a young engineering student, there will be a steady stream of good jobs for people who like to write software for new kinds of input/output devices. =o)

Posted by Johnny Chung Lee at 1:26 AM (3 comments)

Monday, February 21, 2011

Windows Drivers for Kinect, Finally!

Yay! This makes me happy. Microsoft officially announces support for Windows Drivers for the Kinect Camera as a free download in the Spring.

This was something I was pushing really hard on in the last few months before my departure, and I am glad to see the efforts of colleagues in the research wing of Microsoft (MSR) and the XBox engineering team carry this to fruition. It's unfortunate this couldn't have happened closer to launch day. But, perhaps it took all the enthusiasm of the independent developer community to convince the division to do this. It certainly would have been nice if all this neat work was done on Microsoft software platforms.

I actually have a secret to share on this topic. When my internal efforts for a driver stalled, I decided to approach AdaFruit to put on the Open Kinect contest. For obvious reasons, I couldn't run the contest myself. Besides, Phil and Limor did a phenomenal job, much better than I could have done. Without a doubt, the contest had a significant impact in raising awareness about the potential for Kinect beyond Xbox gaming both inside and outside the company. Best $3000 I ever spent.

In my opinion, all the press coverage around the independent projects brought a lot of additional positive attention to the product launch. That unto itself became the topic of international news.

But to take this even further, it would be awesome if Microsoft went so far as to hold a small conference to actually showcase people doing interesting projects with Kinect. It is a really great device, and such an outreach program would give Microsoft an opportunity to engage with very enthusiastic partners to potentially build new applications around it both inside and outside of gaming. At the very least, it would be a cheap way to recruit potential hires.

There are lots of smart people outside of Microsoft that would like to build interesting stuff with it. Most of it probably won't be a "Microsoft-scale" business initially, but it is worth enabling and incubating in aggregate. Though, a large portion of the expert community is already using the Kinect camera in their own projects on just about every OS and every development tool in existence. So, Microsoft will need to give researchers and independent developers a reason to go back to their platform - be it opportunities to engage with people at Microsoft/MSR, other Kinect developers, or opportunities to share their work through larger distribution channels such as XNA, app stores, or XBox downloadable games. We have just seen the beginning of what can be done with low-cost depth cameras.

Posted by Johnny Chung Lee at 3:06 PM (9 comments)

Wednesday, February 9, 2011

Low-Cost Video Chat Robot

Since I relocated down to Mountain View, I wanted a good way to keep in touch with my fiance who is still back in Seattle. So, I decided to mount an old netbook I had on top of an iRobot Create to create a video chat robot that I could use to drive around the house remotely. Since it was a good procrastineering project, I decided to document it here.

There are two major components to the project: the iRobot Create, which costs around $250 (incl. battery, charger, and USB serial cable), and the netbook, which I got for around $250 as well. At $500, this is a pretty good deal considering many commercial ones go for several thousand dollars. The software was written in C# with Visual Studio Express 2010 and only tested on Windows 7 with the "Works on my machine" certification. =o) I'm sure there are TONs of problems with it, but the source is provided. So, feel free to try to improve it.

Download Software:

VideoChatRobot v0.1 (posted 2/9/2011)

VideoChatRobot v0.2 (posted 2/11/2011)

Included are the executable, C# source, and two PDFs: one describing installation and usage of the control software, the other more information about modifying the charging station.

The software does a few nice things like try to setup UPnP router port forwarding automatically, queries the external IP needed to make a connection over the open internet, maintains a network heartbeat which stops the robot if the connection is lost, a control password, auto-connect on launch options, and even mediates the maximum acceleration/deceleration of the motors so it doesn't jerk so much or fall over.
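Two of those features, the heartbeat and the acceleration limit, are simple enough to sketch. The following is a Python illustration of the idea only, not a port of the C# in the download; the class names, the speed units, and the timeout are all made up for the example.

```python
class RateLimiter:
    """Limit how much the commanded speed can change per control step."""

    def __init__(self, max_delta_per_step):
        self.max_delta = max_delta_per_step
        self.current = 0.0

    def step(self, requested):
        delta = requested - self.current
        delta = max(-self.max_delta, min(self.max_delta, delta))
        self.current += delta
        return self.current


class Heartbeat:
    """Track whether the remote side has checked in recently."""

    def __init__(self, timeout_s):
        self.timeout_s = timeout_s
        self.last_seen = None

    def beat(self, now):
        self.last_seen = now

    def is_alive(self, now):
        return self.last_seen is not None and now - self.last_seen <= self.timeout_s


def next_wheel_speed(limiter, heartbeat, requested, now):
    """Ramp toward the requested speed, or toward zero if the link is lost."""
    target = requested if heartbeat.is_alive(now) else 0.0
    return limiter.step(target)
```

In this sketch a lost connection ramps the robot down through the same limiter rather than cutting the motors instantly. That is a design choice for the example; an immediate stop is the other obvious option.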

The UPnP port forwarding is far from perfect and is not well tested at all. If it works for you, consider yourself lucky. Otherwise, ask a friend how to set up port forwarding to enable remote control over the internet.

Once you have all the parts (the netbook, the robot, the serial cable, the software), you can probably be up and running within 5 minutes. Assembly is merely plugging cables together. Mounting the netbook can be done with velcro or tape. Building the rise stand is more challenging, but entirely optional. I happen to have access to a laser cutter to make my clear plastic stand, but you can probably make something adequate out of wood.

Optional: Modifying the Charging Station

Probably one of the more interesting parts of this project from a procrastineering standpoint is the modification to the docking station so that it would charge something else in addition to the robot base.

What I did is admittedly pretty crude and arguably rather unsafe. So, this is HIGHLY NOT RECOMMENDED unless you are very comfortable working with high voltage electricity and accept all the personal risks of doing so and potential risks to others. This is provided for informational purposes only and I am not responsible for any damages or harm resulting from the use of this material. Working with household power lines can be very dangerous posing both potential electrocution and fire risk. This is also unquestionably a warranty voiding activity. DO NOT ATTEMPT THIS without appropriate supervision or expertise.

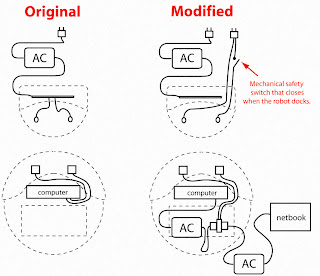

Now that I've hopefully scared you away from doing this... what exactly did I do? A high level picture is shown here:

The PDF document in the download describes the changes in more detail. But I had a lot of trouble trying to tap the existing iRobot Create charging voltage to charge something else, primarily because the charging voltage dips down to 0V periodically and holds for several milliseconds. That would have required making some kind of DC uninterruptible power supply and would have made the project much more complex. The easiest way to support a wide range of devices that could ride on the robot was to somehow get 120V AC to the cargo bay... those of you with some familiarity with electronics can probably see the variety of safety hazards this poses. So, again, this is HIGHLY NOT RECOMMENDED and is meant to just be a reference for trying to come up with something better.

I actually do wish iRobot would modify the charging station for the Create to officially provide a similar type of charging capability. It is such a nice robot base and it is an obvious desire to have other devices piggy back on the robot that might not be able to run off the Create's battery supply. I personally believe it would make it a dramatically more useful and appealing robot platform.

Usage Notes

At the time of this post, I've been using it remotely for about a month on a regular basis between Mountain View and Seattle. My nephews in Washington DC were also able to use it to chase my cat around my house in Seattle quite effectively. Thus far, it has worked without any real major problems. The only real interventions on the remote side have been when I ran it too long (>4 hours) and the netbook battery died, or when the optional 4th wheel on the iRobot Create popped off, which can be solved with some super glue. Otherwise, the control software and the charging station have been surprisingly reliable. Using remote desktop software like TeamViewer, I can push software changes to the netbook remotely, restart the computer, put Skype into full screen (which it frustratingly doesn't have as a default option for auto-answered video calls), and otherwise check in on the health of the netbook.

Posted by Johnny Chung Lee at 1:43 AM (27 comments)

Tuesday, January 18, 2011

"Hi, Google. My name is Johnny."

It was a wild ride, helping Kinect along through the very early days of incubation (even before it was called "Project Natal") all the way to shipping 8 million units in the first 60 days. It's not often you work on a project that gets a lavish product announcement by Cirque du Soleil and a big Times Square Launch party. The success of Kinect is a result of fantastic work by a lot of people. I'm also very happy that so many other people share my excitement about the technology.

Posted by Johnny Chung Lee at 1:56 AM (0 comments)

Sunday, January 2, 2011

Khan Academy: The closest thing to downloading knowledge into your brain + a $5000 challenge to you

A few months ago, I rediscovered the Khan Academy after stumbling across a presentation by Salman Khan. If you aren't familiar with this, I recommend making it an absolute priority take a quick scan of some of the videos. Here's a summary video from the website:

Sal has an astonishingly approachable and understandable method of explaining topics in his videos. He also has an incredibly deep understanding of the material he talks about, hinting at lower levels of complexity that he might be skimming over but sometimes revisits in future videos. The Khan Academy videos are, in my opinion, perhaps one of the most interesting things to happen to education in a very very long time. If I may, "disruptive". Anyone with an Internet connection can go from basic fundamentals all the way up to a college level education in many topics in a clear, organized, understandable manner... all for free. His teaching style is more effective along many dimensions than any I have personally experienced in any classroom.

Recently, I've realized that I need to learn more linear algebra. Over the years, I have picked up little bits here and there doing computer graphics and basic data analysis, but I never understood it well enough to see why it really works or, more importantly, to apply it to solve completely new problems that might be somewhat non-standard. I managed to never take a proper linear algebra course in college or grad school.

So, why do I suddenly care about learning linear algebra now? And consequently, why should you care? Well, if you want to understand how the Wiimote Whiteboard program works, you need a little bit of linear algebra. If you want to understand how video games are rendered on the screen, you need linear algebra. If you want to understand how Google works, how parts of Kinect work, how the $1 million Netflix Prize was won, how financial modeling works, or in general how to analyze the relationship between two large data sets in the world... you need linear algebra. I'm discovering more and more that any modern sophisticated engineering, modeling, prediction, analysis, fitting, or optimization problem now usually involves computers crunching on linear algebra equations. Unfortunately, this fact was never properly explained to me in college, so I never prioritized taking a class.

I understood the basics enough to do computer graphics, rendering stuff on the screen (3D to 2D). But much of modern computer vision is about reversing those equations, going from 2D back to 3D, which involves solving a lot of linear algebra equations to recover unknown data. And I've found that computer vision papers seem to be the worst places to look for a clear explanation of the math being performed. There almost appears to be a desire to see how obtusely one can describe their work.
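To make the "3D to 2D and back" idea a little more concrete, here is a minimal Python sketch. It assumes the simplest possible pinhole camera sitting at the origin and looking down the z axis. The function names `project` and `back_project` and the `focal_length` parameter are made up for illustration and don't come from any particular library. Note that going backwards only works here because the depth is handed in as a known value. In real computer vision the depth is one of the unknowns, which is exactly where the heavier linear algebra comes in.

```python
def project(point3d, focal_length=1.0):
    """Project a 3D point (x, y, z) onto the 2D image plane of an
    idealized pinhole camera at the origin looking down +z."""
    x, y, z = point3d
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    # Perspective divide: points farther away land closer to the center.
    return (focal_length * x / z, focal_length * y / z)


def back_project(point2d, depth, focal_length=1.0):
    """Recover the 3D point from its 2D projection, ASSUMING the depth
    is already known. Without it, every point along the viewing ray
    projects to the same 2D location."""
    u, v = point2d
    return (u * depth / focal_length, v * depth / focal_length, depth)


if __name__ == "__main__":
    p = (2.0, 4.0, 2.0)
    uv = project(p)
    print(uv)
    print(back_project(uv, depth=2.0))
```

The forward direction throws away depth, so two different 3D points on the same ray give the same 2D result. Recovering that lost information from multiple views or known geometry is what turns this into a system of equations to solve.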

Fortunately, Khan Academy has over 130 videos on Linear Algebra. Since I knew I would be traveling this holiday, I decided to load up all of the videos on my phone to watch during down time. Watching videos here and there while sitting on planes, trains, and buses, or waiting in lines, I was able to watch all 130+ videos, which cover a 1st year college Linear Algebra course, in about 3 weeks. Pure awesome.

However, the Linear Algebra lectures stopped just as I thought it was getting to the interesting part. I was hoping it would get to covering topics such as Singular Value Decomposition, numerical analysis, perspective projections (and reversing them), sparse matrices, bundle adjustment, and then real-world application examples. I'm going to order some books on these topics, but I really really love the video lecture format Sal uses in the Khan Academy and wish the lectures continued.

The $5000 challenge:

As an attempt to continue expanding this lecture series in the Khan Academy, I want to encourage people who feel like they can give clearly understandable lectures on these topics to pick up where Sal left off. Apparently, there is an informal method of adding your own videos to the academy. I've already donated some money to the Khan Academy (a not-for-profit 501(c)(3)). But, as a call to the community, to incentivize people who are able to produce good video lectures on advanced Linear Algebra: for each video posted that continues the Linear Algebra series (and passes the bar of a "clearly understandable", Khan Academy style, 10 minute video lecture), I will donate $100 to Khan Academy, up to $5000. So, not only would you be educating thousands (possibly millions) of people, you will be ensuring that your material stays free.

If you do take me up on this offer and do post a video, let me know at johnny@johnnylee.net, and I will review the video. If it passes the bar, I will donate the money and then send you the receipt.

Posted by Johnny Chung Lee at 1:37 AM

0 comments